27) In order to use the t-statistic for hypothesis testing and to construct a 95% confidence interval as ±1.96 standard errors, the following three assumptions have to hold:

A) the conditional mean of ui given Xi is zero; (Xi, Yi), i = 1, 2, …, n, are i.i.d. draws from their joint distribution; Xi and ui have four moments

B) the conditional mean of ui given Xi is zero; (Xi, Yi), i = 1, 2, …, n, are i.i.d. draws from their joint distribution; homoskedasticity

C) the conditional mean of ui given Xi is zero; (Xi, Yi), i = 1, 2, …, n, are i.i.d. draws from their joint distribution; the conditional distribution of ui given Xi is normal

D) none of the above

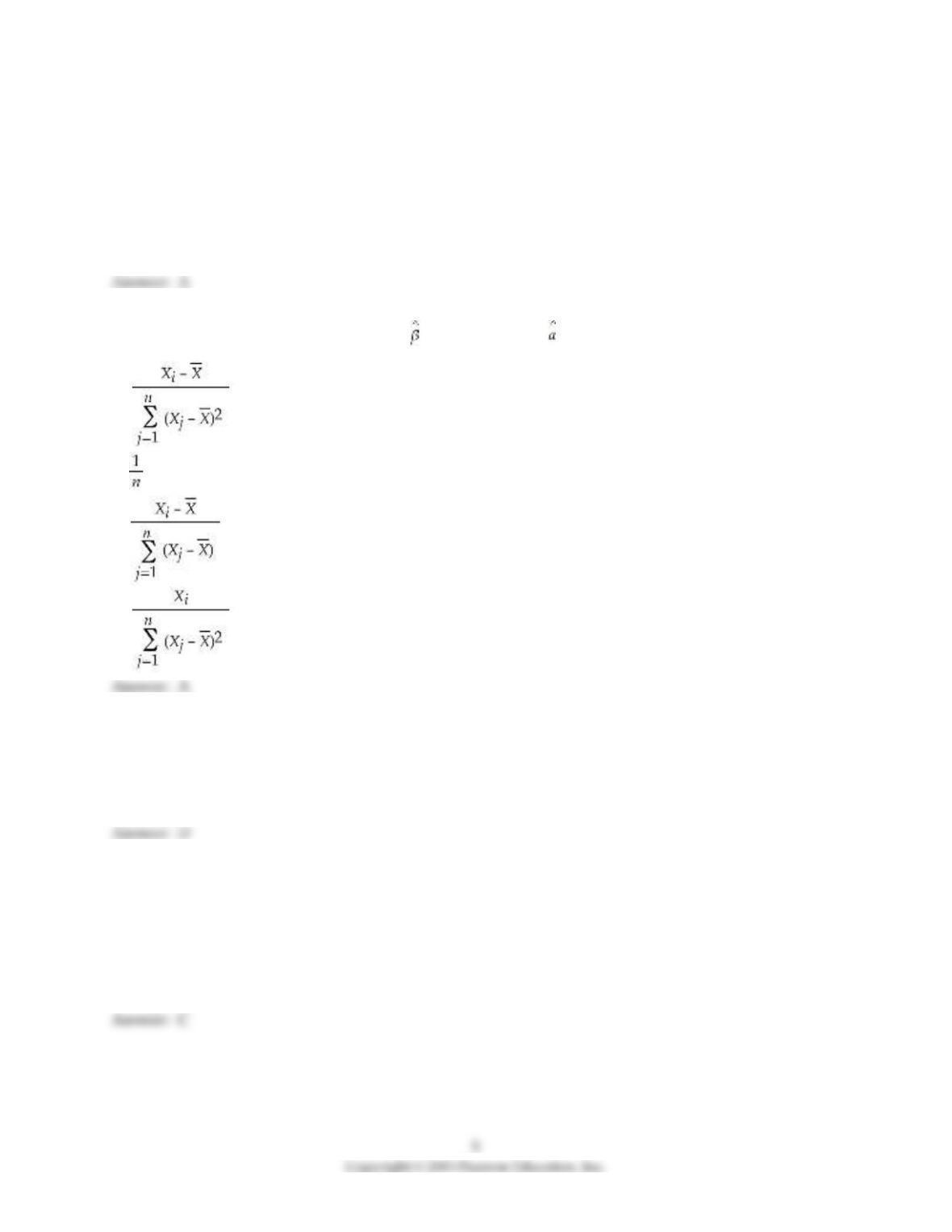

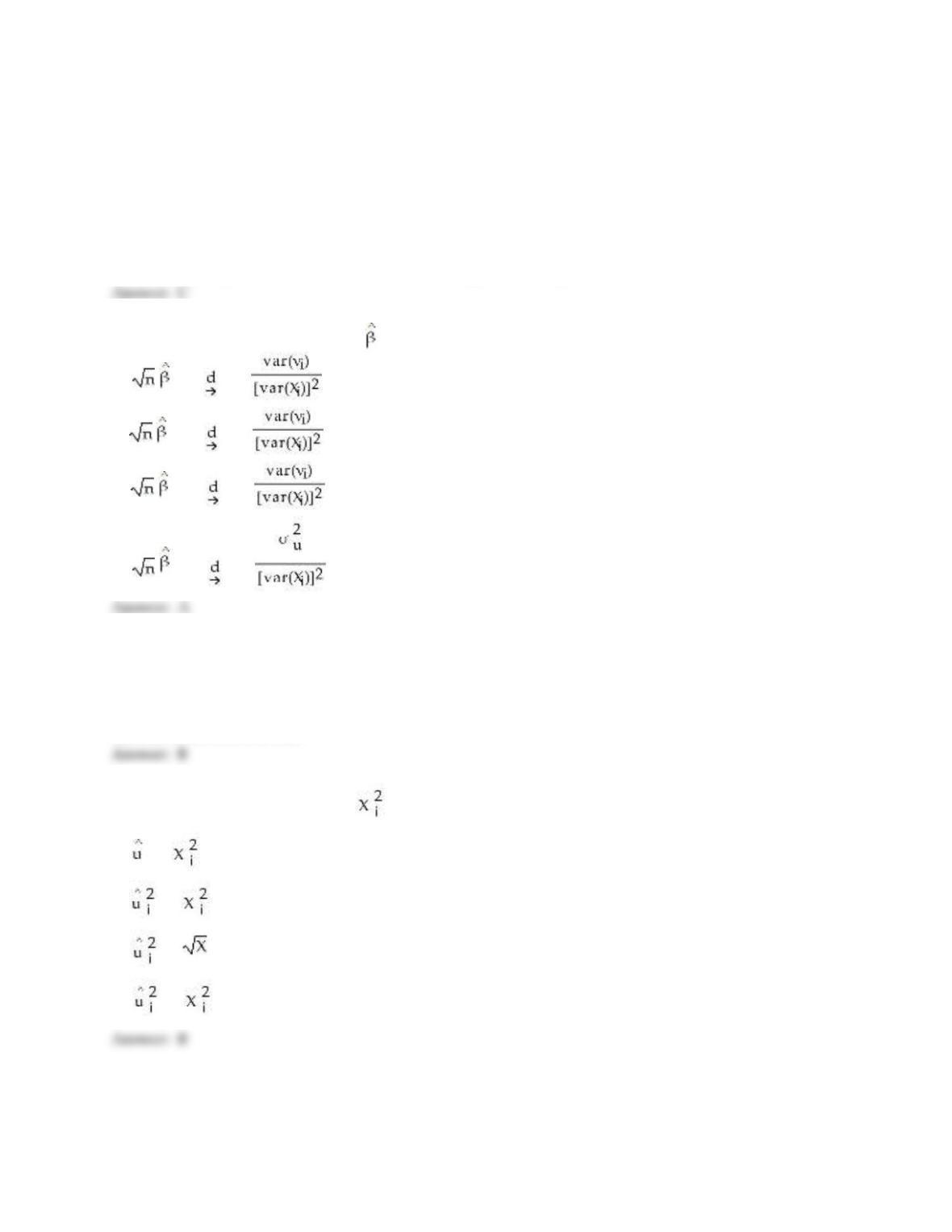

28) If the variance of u is quadratic in X, then it can be expressed as

A) var(ui|Xi) =

B) var(ui|Xi) = θ0 + θ1

C) var(ui|Xi) = θ0 + θ1

D) var(ui|Xi) =

29) In practice, you may want to use the OLS estimator instead of the WLS because

A) heteroskedasticity is seldom a realistic problem

B) OLS is easier to calculate

C) heteroskedasticity robust standard errors can be calculated

D) the functional form of the conditional variance function is rarely known

30) If the functional form of the conditional variance function is incorrect, then

A) the standard errors computed by WLS regression routines are invalid

B) the OLS estimator is biased

C) instrumental variable techniques have to be used

D) the regression R² can no longer be computed