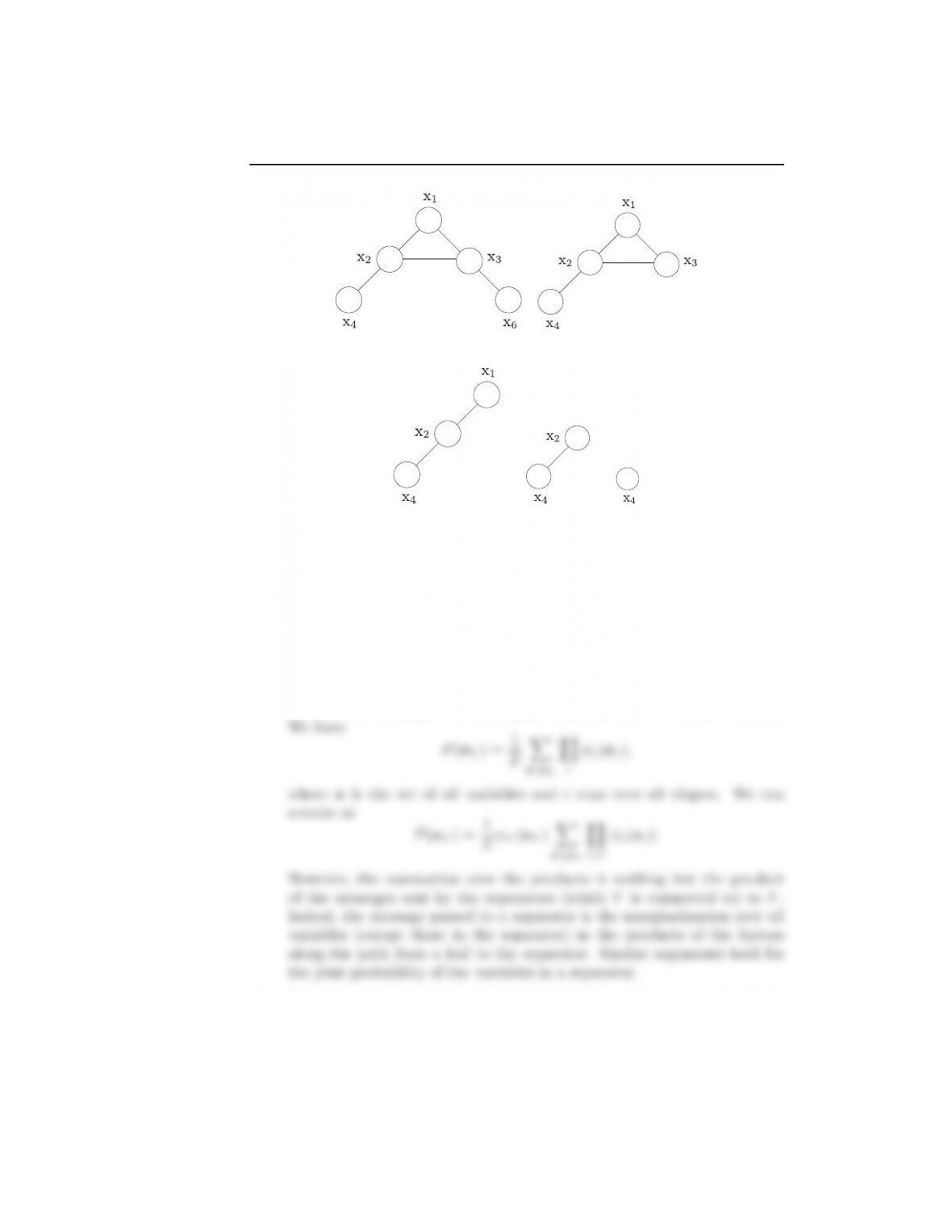

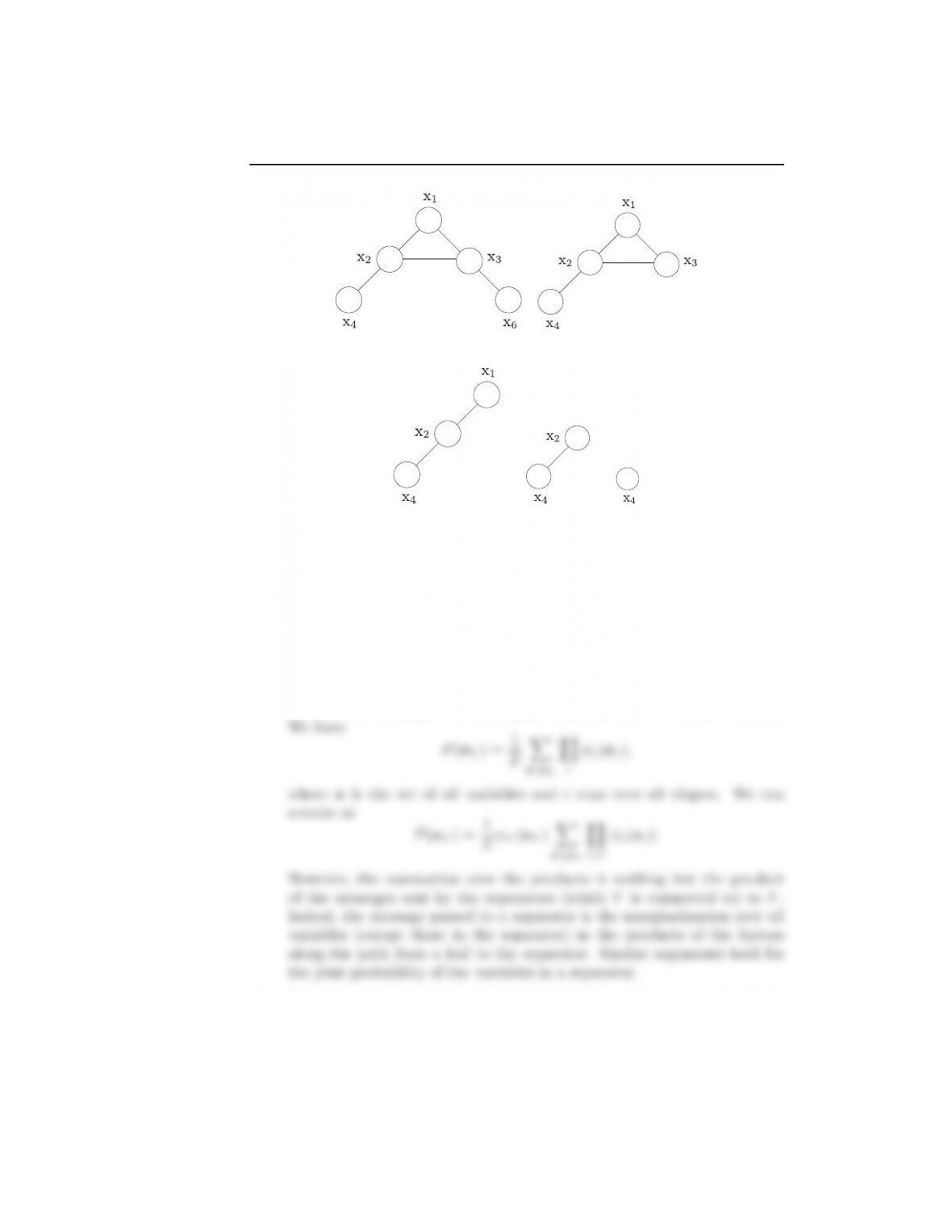

Figure 3: The setup for Problem 16.5

Figure 4: (a) The graph for Problem 16.6. (b) A triangulated graph.

16.6. Consider the graph in Figure 4a. Obtain a triangulated version of it.

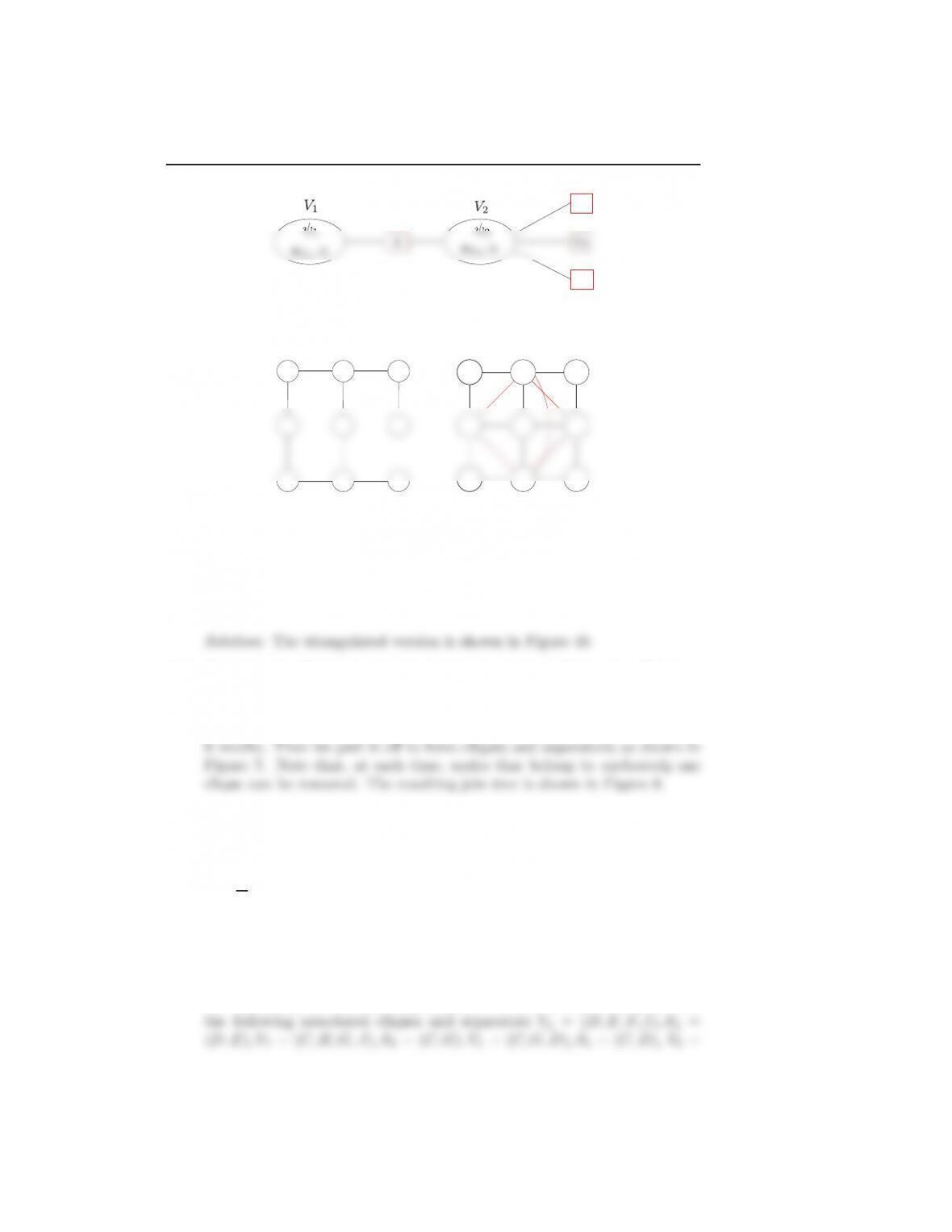

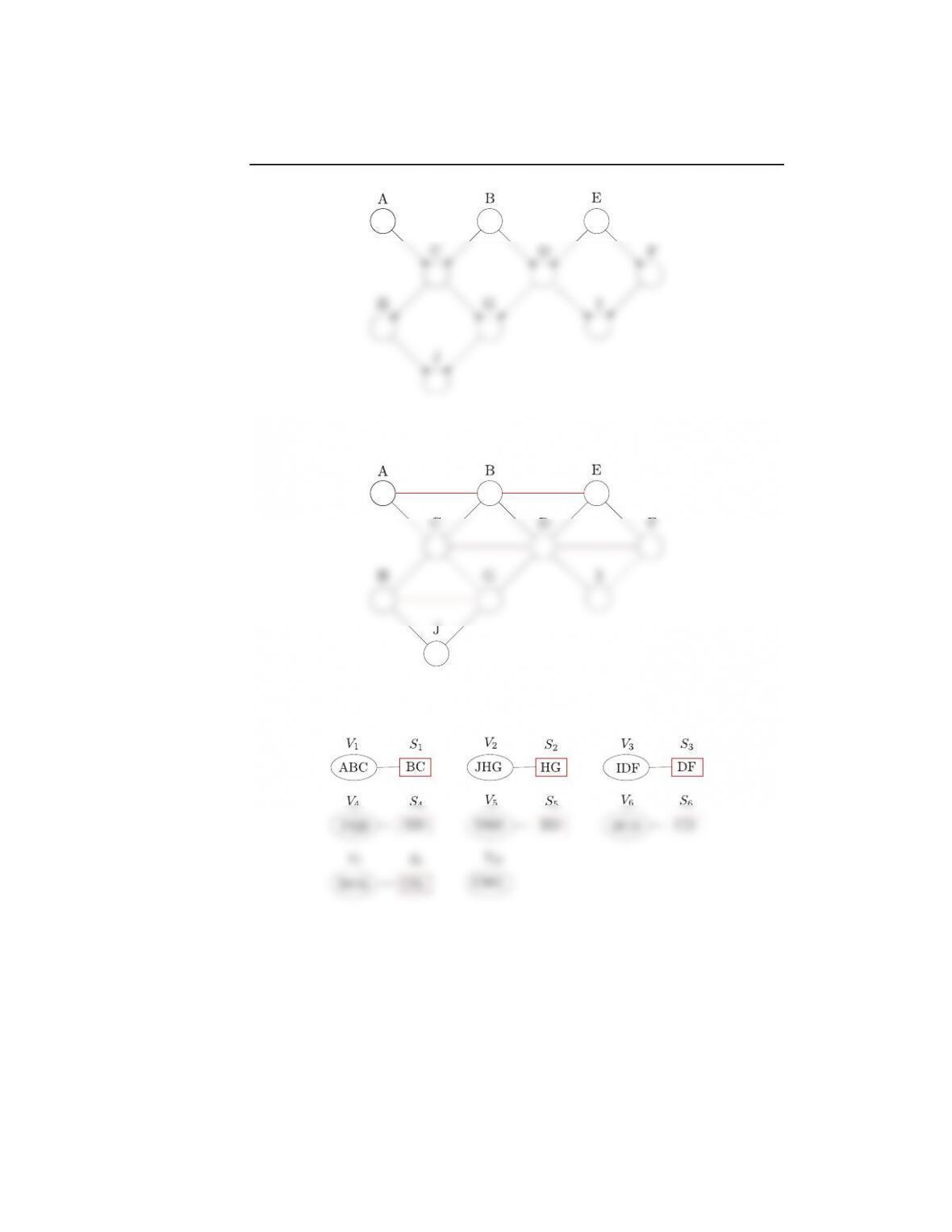

16.7. Consider the Bayesian network structure given in Figure 5. Obtain an equivalent join tree.

Solution: First, we moralize the network, and the structure of Figure

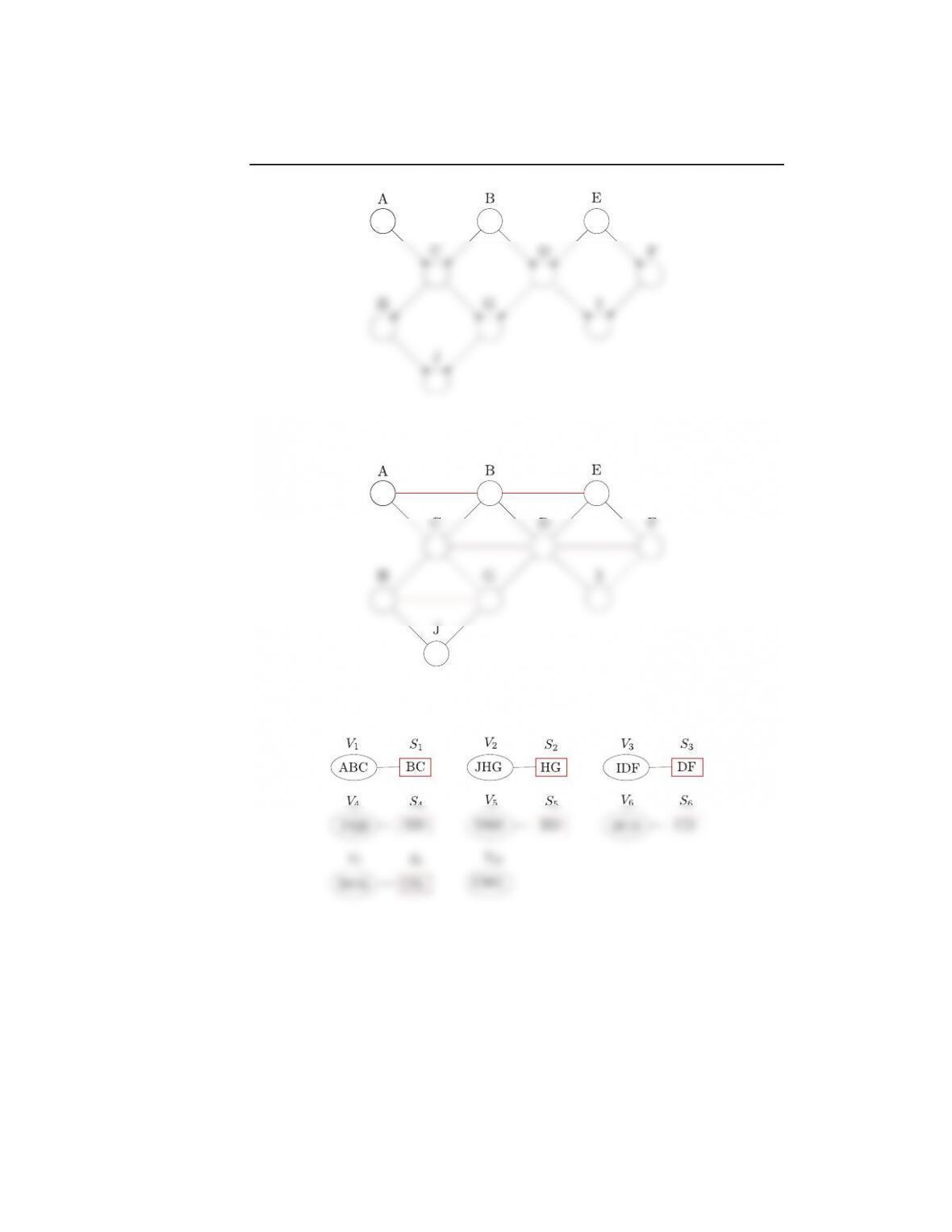

16.8. Consider the random variables A, B, C, D, E, F, G, H, I, J and assume that the joint distribution is given by the product of the following potential functions:

$$p = \frac{1}{Z}\,\psi_1(A, B, C, D)\,\psi_2(B, E, D)\,\psi_3(E, D, F, I)\,\psi_4(C, D, G)\,\psi_5(C, H, G, J)$$

Construct an undirected graphical model on which this joint probability factorizes, and then derive an equivalent junction tree.
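The construction asked for in Problem 16.8 can be checked mechanically. The following is a minimal Python sketch, not the author's method: it assumes the undirected graph is obtained by connecting every pair of variables that share a potential, runs node elimination in the order F, I, H, J, G, E, D, C, B, A quoted in the solution, and then links the resulting maximal cliques with a maximum-weight spanning tree (weight = separator size), a standard way to obtain a junction tree. The function names `build_graph`, `eliminate`, and `junction_tree` are illustrative only.

```python
from itertools import combinations

# Scopes of the potentials psi_1 ... psi_5, taken from the problem statement.
scopes = [set("ABCD"), set("BED"), set("EDFI"), set("CDG"), set("CHGJ")]

def build_graph(scopes):
    """Connect every pair of variables that appear in the same potential."""
    adj = {}
    for scope in scopes:
        for v in scope:
            adj.setdefault(v, set())
        for u, v in combinations(scope, 2):
            adj[u].add(v)
            adj[v].add(u)
    return adj

def eliminate(adj, order):
    """Eliminate nodes in the given order; return fill-in edges and maximal cliques."""
    adj = {v: set(nbrs) for v, nbrs in adj.items()}  # work on a copy
    fill_ins, cliques = [], []
    for v in order:
        nbrs = adj[v]
        # Connect all remaining neighbours of v, recording any edge that had to be added.
        for a, b in combinations(sorted(nbrs), 2):
            if b not in adj[a]:
                adj[a].add(b)
                adj[b].add(a)
                fill_ins.append((a, b))
        clique = frozenset(nbrs | {v})
        # Keep the clique only if it is not contained in one found earlier.
        if not any(clique <= c for c in cliques):
            cliques.append(clique)
        for n in nbrs:
            adj[n].discard(v)
        del adj[v]
    return fill_ins, cliques

def junction_tree(cliques):
    """Maximum-weight spanning tree over the clique graph (Kruskal with union-find)."""
    edges = sorted(
        ((len(a & b), i, j)
         for (i, a), (j, b) in combinations(enumerate(cliques), 2) if a & b),
        reverse=True,
    )
    parent = list(range(len(cliques)))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    tree = []
    for _, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[ri] = rj
            tree.append((cliques[i], cliques[j], cliques[i] & cliques[j]))
    return tree

graph = build_graph(scopes)
fill_ins, cliques = eliminate(graph, "FIHJGEDCBA")
tree = junction_tree(cliques)

print("fill-in edges:", fill_ins)
for c1, c2, sep in tree:
    print("".join(sorted(c1)), "--", "".join(sorted(sep)), "--", "".join(sorted(c2)))
```

Under these assumptions the elimination adds no fill-in edges, so the graph built from the potentials is already triangulated, and the maximal cliques coincide with the five potential scopes.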

Solution: The corresponding graph is shown in Figure 6. The nodes are eliminated in the following sequence: F, I, H, J, G, E, D, C, B, A with