$$
= \frac{\sum_{x_{k+1}} \cdots \sum_{x_l} \prod_{i=1}^{l} P(x_i \mid Pa_i)}{\sum_{x_k} \cdots \sum_{x_l} \prod_{i=1}^{l} P(x_i \mid Pa_i)} := \frac{A}{B}
$$

The numerator $A$ becomes

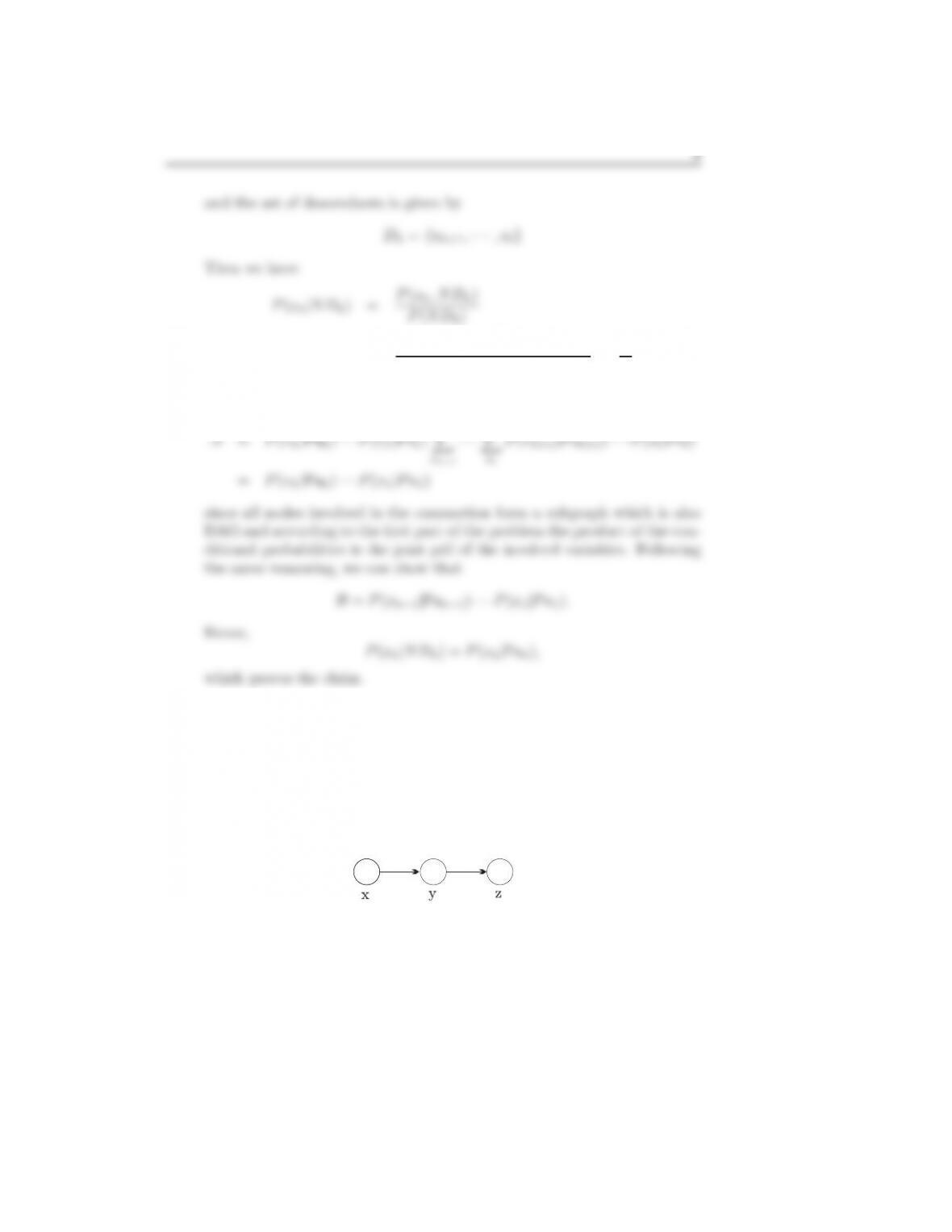
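The ratio $A/B$ is the joint summed over $x_{k+1},\dots,x_l$ divided by the joint summed over $x_k,\dots,x_l$, that is, $P(x_1,\dots,x_k)/P(x_1,\dots,x_{k-1}) = P(x_k \mid x_1,\dots,x_{k-1})$. The brute-force sketch below evaluates both sums directly by enumeration, as a sanity check on small examples. The three-node binary chain, its CPT values, and the helper names `joint`, `summed_joint` and `conditional` are hypothetical, chosen only for illustration; enumeration grows exponentially with the number of summed variables, so this is not an efficient inference method.

```python
from itertools import product

# Hypothetical example network (not from the text): chain x1 -> x2 -> x3, binary values.
# Each CPT maps (value of x_i, tuple of parent values) -> P(x_i | Pa_i).
parents = {1: (), 2: (1,), 3: (2,)}
cpt = {
    1: {(0, ()): 0.6, (1, ()): 0.4},
    2: {(0, (0,)): 0.7, (1, (0,)): 0.3, (0, (1,)): 0.2, (1, (1,)): 0.8},
    3: {(0, (0,)): 0.9, (1, (0,)): 0.1, (0, (1,)): 0.5, (1, (1,)): 0.5},
}
values = (0, 1)
num_vars = 3  # l in the text


def joint(x):
    """prod_{i=1}^{l} P(x_i | Pa_i) for a full assignment x (dict: index -> value)."""
    p = 1.0
    for i in range(1, num_vars + 1):
        pa = tuple(x[j] for j in parents[i])
        p *= cpt[i][(x[i], pa)]
    return p


def summed_joint(fixed, free):
    """Sum the joint over every value combination of the `free` variables,
    holding the variables in `fixed` at their given values."""
    total = 0.0
    for combo in product(values, repeat=len(free)):
        x = dict(fixed)
        x.update(zip(free, combo))
        total += joint(x)
    return total


def conditional(k, observed):
    """Return A / B, i.e. P(x_k | x_1, ..., x_{k-1}); `observed` holds x_1..x_k."""
    a = summed_joint({i: observed[i] for i in range(1, k + 1)},
                     list(range(k + 1, num_vars + 1)))
    b = summed_joint({i: observed[i] for i in range(1, k)},
                     list(range(k, num_vars + 1)))
    return a / b


print(conditional(2, {1: 0, 2: 1}))
```

Because the variables here are in topological order along a chain, the ratio reduces to the CPT entry $P(x_k \mid Pa_k)$, e.g. $P(x_2 = 1 \mid x_1 = 0) = 0.3$, which makes the enumeration easy to check by hand.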

15.5. Consider the graph in Figure 1. The r.v. x has two possible outcomes, with probabilities $P(x_1) = 0.3$ and $P(x_2) = 0.7$. Variable y has three possible outcomes, with conditional probabilities

$$
\begin{aligned}
P(y_1|x_1) &= 0.3, & P(y_2|x_1) &= 0.2, & P(y_3|x_1) &= 0.5,\\
P(y_1|x_2) &= 0.1, & P(y_2|x_2) &= 0.4, & P(y_3|x_2) &= 0.5.
\end{aligned}
$$

Finally, the conditional probabilities for z are

$$
\begin{aligned}
P(z_1|y_1) &= 0.2, & P(z_2|y_1) &= 0.8,\\
P(z_1|y_2) &= 0.2, & P(z_2|y_2) &= 0.8,\\
P(z_1|y_3) &= 0.4, & P(z_2|y_3) &= 0.6.
\end{aligned}
$$

Figure 1: Graphical Model for Problem 15.5
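The excerpt stops before the actual question of Problem 15.5, so the following only sketches one computation the given numbers support. Assuming, as the conditionals $P(y|x)$ and $P(z|y)$ suggest, that Figure 1 is the chain x → y → z, the marginals follow by summing out each parent in turn: $P(y) = \sum_x P(y|x)P(x)$ and $P(z) = \sum_y P(z|y)P(y)$. The dictionary layout and the helper `marginalize` are illustrative choices, not the book's notation.

```python
# Probabilities from Problem 15.5 (chain x -> y -> z assumed).
p_x = {"x1": 0.3, "x2": 0.7}
p_y_given_x = {
    "x1": {"y1": 0.3, "y2": 0.2, "y3": 0.5},
    "x2": {"y1": 0.1, "y2": 0.4, "y3": 0.5},
}
p_z_given_y = {
    "y1": {"z1": 0.2, "z2": 0.8},
    "y2": {"z1": 0.2, "z2": 0.8},
    "y3": {"z1": 0.4, "z2": 0.6},
}


def marginalize(p_parent, cpt):
    """P(child) = sum over parent values of P(child | parent) * P(parent)."""
    out = {}
    for parent, p in p_parent.items():
        for child, q in cpt[parent].items():
            out[child] = out.get(child, 0.0) + q * p
    return out


p_y = marginalize(p_x, p_y_given_x)   # sum out x
p_z = marginalize(p_y, p_z_given_y)   # sum out y
print(p_y)
print(p_z)
```

By hand, $P(y_1) = 0.3 \cdot 0.3 + 0.1 \cdot 0.7 = 0.16$, and likewise $P(y_2) = 0.34$, $P(y_3) = 0.5$; then $P(z_1) = 0.2 \cdot 0.16 + 0.2 \cdot 0.34 + 0.4 \cdot 0.5 = 0.3$ and $P(z_2) = 0.7$. The printed values should match these up to floating-point rounding.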