
6.7 SOLUTIONS

Notes: The three types of inner products described here (in Examples 1, 2, and 7) are matched by examples in Section 6.8. It is possible to spend just one day on selected portions of both sections. Example 1 matches the weighted least squares in Section 6.8. Examples 2–6 are applied to trend analysis in Section 6.8. This material is aimed at students who have not had much calculus or who intend to take more than one course in statistics.

For students who have seen some calculus, Example 7 is needed to develop the Fourier series in Section 6.8. Example 8 is used to motivate the inner product on C[a, b]. The Cauchy-Schwarz and triangle inequalities are not used here, but they should be part of the training of every mathematics student.

1. The inner product is $\langle x, y\rangle = 4x_1y_1 + 5x_2y_2$. Let x = (1, 1), y = (5, –1).

a. Since $\|x\|^2 = \langle x, x\rangle = 9$, $\|x\| = 3$. Since $\|y\|^2 = \langle y, y\rangle = 105$, $\|y\| = \sqrt{105}$. Finally, $|\langle x, y\rangle|^2 = 15^2 = 225$.
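The arithmetic in Exercise 1 can be checked numerically. The following Python sketch evaluates the weighted inner product 4x1y1 + 5x2y2 for x = (1, 1) and y = (5, –1); the function name `weighted_inner` and its `weights` parameter are illustrative choices, not notation from the text.

```python
import math

def weighted_inner(x, y, weights=(4, 5)):
    """Weighted inner product <x, y> = 4*x1*y1 + 5*x2*y2 (weights taken from Exercise 1)."""
    return sum(w * a * b for w, a, b in zip(weights, x, y))

x = (1, 1)
y = (5, -1)

norm_x_sq = weighted_inner(x, x)   # 4*1 + 5*1
norm_y_sq = weighted_inner(y, y)   # 4*25 + 5*1
xy = weighted_inner(x, y)          # 4*5 + 5*(-1)

print(norm_x_sq, math.sqrt(norm_x_sq))  # 9 3.0
print(norm_y_sq)                        # 105
print(xy, abs(xy) ** 2)                 # 15 225
```

Passing a different `weights` tuple gives other weighted inner products of the same form, provided every weight is positive.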

2. The inner product is $\langle x, y\rangle = 4x_1y_1 + 5x_2y_2$. Let x = (3, –2), y = (–2, 1). Compute that

3. The inner product is ⟨ p, q⟩ = p(–1)q(–1) + p(0)q(0) + p(1)q(1), so

4. The inner product is ⟨ p, q⟩ = p(–1)q(–1) + p(0)q(0) + p(1)q(1), so $\langle 3t - t^2,\, 3 + 2t^2\rangle = (-4)(5) + (0)(3) + (2)(5) = -10$.
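The evaluation inner product in Exercise 4 is easy to check by machine. The sketch below assumes the two polynomials are 3t − t² and 3 + 2t² (as read from the exercise); the helper name `eval_inner` is hypothetical and introduced only for illustration.

```python
def eval_inner(p, q, points=(-1, 0, 1)):
    """Evaluation inner product <p, q> = p(-1)q(-1) + p(0)q(0) + p(1)q(1)."""
    return sum(p(t) * q(t) for t in points)

# Polynomials assumed from Exercise 4.
p = lambda t: 3 * t - t ** 2
q = lambda t: 3 + 2 * t ** 2

print([p(t) for t in (-1, 0, 1)])  # [-4, 0, 2]
print([q(t) for t in (-1, 0, 1)])  # [5, 3, 5]
print(eval_inner(p, q))            # -10
```

Representing each polynomial by its values at –1, 0, and 1 is all this inner product ever uses, which is why it behaves like the ordinary dot product on those three-entry value lists.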

5. The inner product is ⟨ p, q⟩ = p(–1)q(–1) + p(0)q(0) + p(1)q(1), so $\langle p, p\rangle = \langle 4 + t,\, 4 + t\rangle = 3^2 + 4^2 + 5^2 = 50$ and $\|p\| = \sqrt{\langle p, p\rangle} = \sqrt{50} = 5\sqrt{2}$. Likewise
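For p(t) = 4 + t, the value ⟨p, p⟩ = 50 comes from evaluating p at the three points –1, 0, and 1. Spelled out as a worked step:

```latex
\begin{aligned}
p(-1) &= 4 - 1 = 3, \qquad p(0) = 4, \qquad p(1) = 4 + 1 = 5,\\
\langle p, p\rangle &= p(-1)^2 + p(0)^2 + p(1)^2 = 9 + 16 + 25 = 50,\\
\|p\| &= \sqrt{50} = \sqrt{25 \cdot 2} = 5\sqrt{2}.
\end{aligned}
```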

6. The inner product is ⟨ p, q⟩ = p(–1)q(–1) + p(0)q(0) + p(1)q(1), so $\langle p, p\rangle = \langle 3t - t^2,\, 3t - t^2\rangle =$

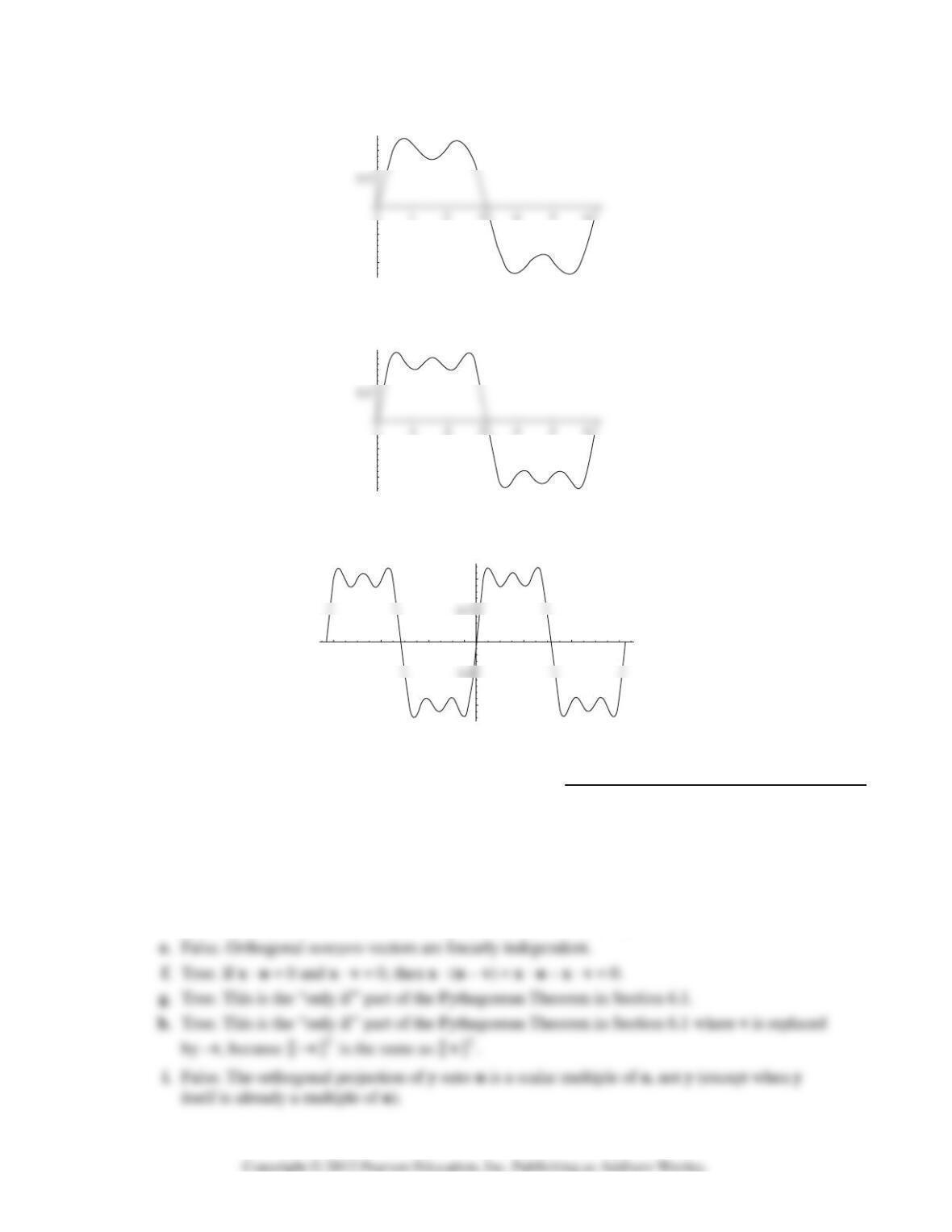

7. The orthogonal projection $\hat{q}$ of q onto the subspace spanned by p is

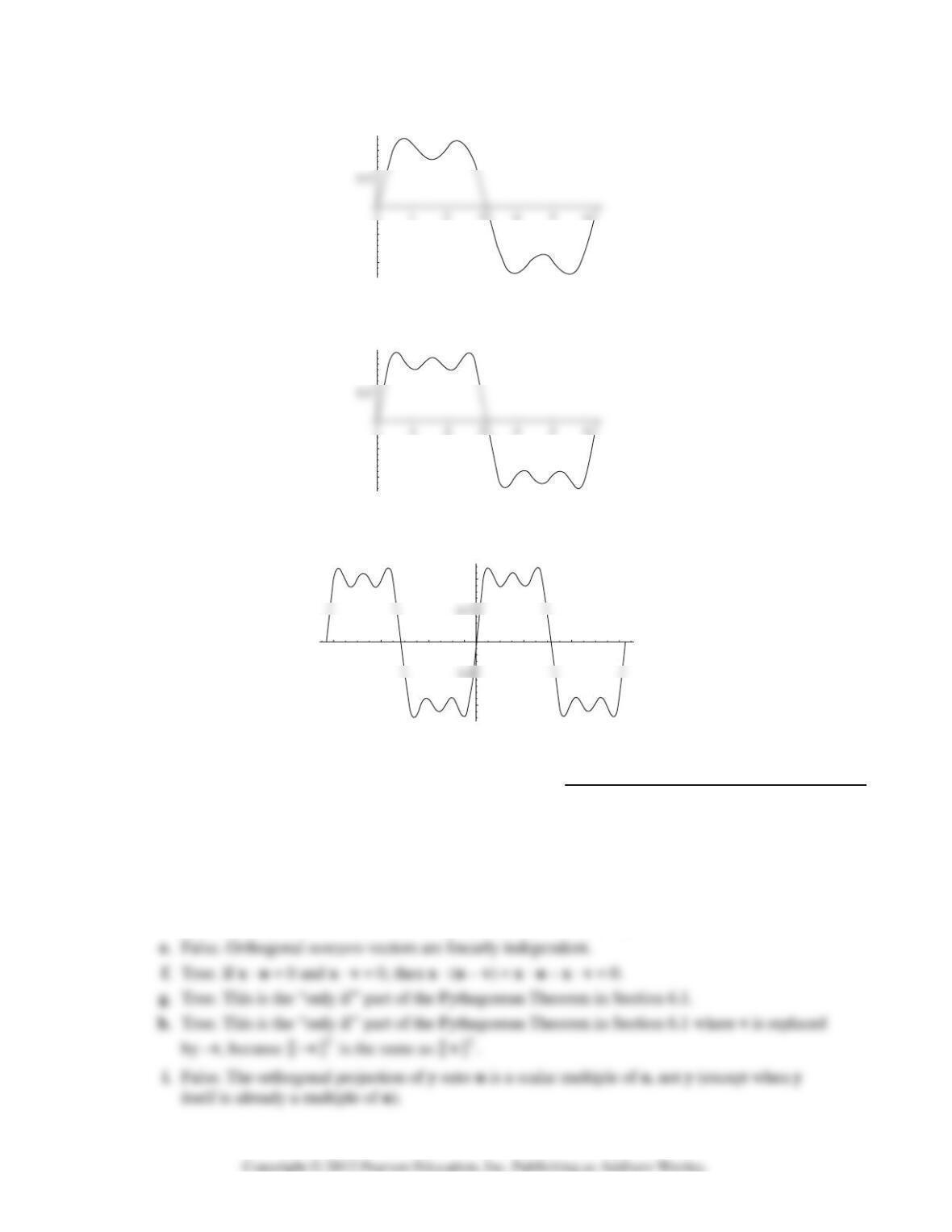

8. The orthogonal projection $\hat{q}$ of q onto the subspace spanned by p is
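For Exercises 7 and 8, the projection of q onto the span of a single vector p is the scalar multiple (⟨q, p⟩ / ⟨p, p⟩) p. The Python sketch below applies that standard formula under the evaluation inner product at –1, 0, 1. The polynomials p(t) = 3t − t² and q(t) = 3 + 2t² are an assumption borrowed from Exercise 4 for illustration, since the polynomials for these exercises are not shown here; the helper names are hypothetical.

```python
from fractions import Fraction

POINTS = (-1, 0, 1)

def eval_inner(p, q):
    """Evaluation inner product <p, q> = p(-1)q(-1) + p(0)q(0) + p(1)q(1)."""
    return sum(p(t) * q(t) for t in POINTS)

def projection_coefficient(q, p):
    """Scalar c = <q, p> / <p, p>; the projection of q onto span{p} is c * p."""
    return Fraction(eval_inner(q, p), eval_inner(p, p))

# Illustrative polynomials (assumed, taken from Exercise 4).
p = lambda t: 3 * t - t ** 2
q = lambda t: 3 + 2 * t ** 2

c = projection_coefficient(q, p)
print(c)  # -1/2

# The residual q - c*p should be orthogonal to p.
residual = lambda t: q(t) - c * p(t)
print(eval_inner(residual, p))  # 0
```

Using `Fraction` keeps the coefficient exact, so the orthogonality check on the residual comes out to exactly zero rather than a small floating-point value.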